WebArch and WEMI: Dialogue (ChatGPT)#

Worum geht es sachlich? Wir vermuten, dass FRBR WEMI geeignet ist, das httpRange-14 Problem etwas zu entspannen. (Texte zum Einlesen, wem die Thematik nicht geläufig ist: (1) webarch: Architecture of the World Wide Web, Volume One W3C Recommendation 15 December 2004 https://www.w3.org/TR/webarch/, about year 2000 ; (2) FRBR WEMI here: IFLA LRM, 2016 (originally FRBR, about 1996) https://en.wikipedia.org/wiki/IFLA_Library_Reference_Model ; (3) httpRange-14: https://www.ibiblio.org/hhalpin/homepage/notes/uri.html. Diese Texte wurden ChatGPT jedoch nicht explizit an die Hand gegeben, die Thematik ist der KI offensichtlich bekannt.)

Dieses Chat-Protokoll dokumentiert einen Versuch: Durch einen Dialog versuchen wir ChatGPT dazu zu bringen, unsere eigene Sichtweise der Dinge zu übernehmen und als Erklärtext ‘rauszuschreiben. Wir protokollieren hier Auszüge aus den Prompts und den Antworten von ChatGPT.

Der Aufsatz ist nachzulesen unter Article: Digital WEMI / httpRange-14

Vorgehen: Zuerst werfen wir Begriffe und Theorien in den Raum (“zero shot prompting”), schauen mal, was von ChatGPT aufgrund seines Hintergrundverständnisses so kommt. Passende Antworten lassen wir stehen, offensichtliche Fehler korrigieren wir. So bauen wir das Kontext-Fenster auf. Später geben wir eine detailliertere Anweisung, einen Aufsatz zu erstellen. Diese Anweisung korrigieren und ergänzen wir so lange, bis der Aufsatz einigermaßen „stimmt“. Dann geben wir Anweisungen, den Aufsatz hier und da zu ergänzen, zusätzliche Constraints zu berücksichtigen, ggf. weitere Theorien in Bezug zu setzen usw.

Wir benutzen folgende Boxes:

Prompt

Prompts im ChatGPT-Chatfenster

Hint

Metatext; Erklärung, was ChatGPT tut

Caution

Metatext; Kommentar von uns für den Leser, dass wir die Antwort von ChatGPT kritisch sehen.

What is a representation?#

Prompt

architecture of the web, tim berners lee. what is a representation?

In the context of web architecture, especially as described by Tim Berners-Lee and the W3C (World Wide Web Consortium), a representation refers to the concrete form of a resource that is transmitted over the web.

Let’s break that down a bit:

What is a Resource?#

A resource is anything that can be identified by a URI (Uniform Resource Identifier). This could be:

A web page (like

https://example.com/index.html)A photo, a video, or a document

An abstract concept (like “the weather in New York”)

A real-world object (like “Tim Berners-Lee” as a person)

So, What is a Representation?#

A representation is the actual data returned when you access a resource via its URI — like what the server sends back in response to your browser’s request.

That representation could be:

An HTML page

A JSON document

An XML file

An image, etc.

Each of these is just one possible representation of the resource.

Example:#

Resource:

https://example.com/catYou request it from a browser:

It returns an HTML page with info about a cat 🐱

You request it from an app using

Accept: application/json:It returns a JSON representation with cat data.

Both are different representations of the same resource.

Why is this important?#

Because the Web’s architecture emphasizes decoupling identity (URI) from the actual format or representation, which allows:

Flexibility (you can get different formats for different needs)

Interoperability (machines and humans can use the same resources differently)

Content negotiation (servers can serve the best format based on what the client asks for)

FRBR WEMI#

Prompt

let us relate this answer to another theory: FRBR WEMI.

Ah, very cool direction! FRBR and the Web architecture actually have a really interesting conceptual overlap, especially when it comes to the idea of representations and abstractions. Let’s walk through it:

Quick Recap: What is FRBR WEMI?#

FRBR (Functional Requirements for Bibliographic Records) is a conceptual model in library science that breaks down bibliographic resources into four levels:

Work — the abstract intellectual creation

e.g., Hamlet by Shakespeare

Expression — the realization of the work

e.g., a French translation of Hamlet

Manifestation — the physical or digital embodiment of the expression

e.g., a 2001 Penguin paperback of the French translation

Item — a single exemplar of that manifestation

e.g., your personal copy of that book on your shelf

WEMI = Work → Expression → Manifestation → Item

How This Maps to Web Architecture#

Let’s now relate WEMI to how Tim Berners-Lee and the Web Architecture treat resources and representations.

FRBR |

Web Architecture |

Example |

|---|---|---|

Work |

Abstract resource |

The concept of “Hamlet” (not tied to any format) |

Expression |

Resource with variants |

French, German, Modern English versions of Hamlet |

Manifestation |

URI-bound resource |

Specific eBook or edition, with its own URI |

Item |

Representation (retrieved) |

The HTML/JSON/PDF/etc. sent to the client upon request |

Deep Dive on the Key Analogy#

In Web architecture:

A URI identifies a resource, which could correspond to any WEMI level (usually work/expression).

The representation is what you actually get when you dereference the URI — that maps nicely to Item in FRBR: a specific, accessible form.

The same resource can have multiple representations (e.g., HTML, PDF, RDF) — this is similar to manifestations of an expression.

Why This Matters#

Understanding this analogy:

Helps librarians and knowledge managers design linked data systems (like in libraries and archives).

Informs semantic web development — where resources (e.g., “Shakespeare”) need to have clear identifiers and multiple forms for both humans and machines.

Encourages clean URI design, separating what a thing is from how it’s shown — just like WEMI separates the essence from the edition.

Digital WEMI#

Prompt

Lets define a “Digital WEMI”:

two different languages – natural languages same as modelling language like UML, BPMN, also programming language – result in two different expressions. in rdf the expression is the abstract rdf graph.

each expression may have manifestations. different formats like html, pdf, xml, ttl result in different manifestations. in rdf the manifestation is the concrete rdf graph in a specific format.

So, again: what is the relationship between resource and representation in terms of digital wemi?

Now we’re talking — this is a rich conceptual space. Let’s take this slow and crisp.

You’re drawing a parallel between Web Architecture’s resource/representation model and a digital interpretation of RDA WEMI, with special attention to RDF and representation formats. That’s gold. Here’s how we can tighten it up:

WEMI, Digitally Interpreted#

Let’s define a Digital WEMI, as you’ve started:

WEMI Level |

Digital Interpretation |

|---|---|

Work |

The abstract idea or intention (e.g., “a data model of a library system”) |

Expression |

A realization of that idea in a specific language or system (e.g., UML, RDF, JSON-LD, OWL, BPMN, or even natural language descriptions in English or French) |

Manifestation |

A concrete encoding of that expression in a particular format or syntax (e.g., RDF/XML, Turtle, JSON-LD, PDF, HTML) |

Item |

A specific instantiation of that manifestation — like a file on disk or a server response |

So yes — UML vs. RDF vs. English are different Expressions, and Turtle vs. RDF/XML vs. JSON-LD are different Manifestations of a single RDF-based Expression.

Web Architecture Recap#

In W3C’s Architecture of the Web, we have:

Resource — anything with identity (via URI)

Representation — a concrete byte stream returned upon dereferencing a URI (e.g., an HTML document, a PDF, etc.)

Content Negotiation — server provides different representations of the same resource depending on headers like

Accept,Accept-Language, etc.

Now, the Relationship#

Here’s how it all connects:

Concept |

Web Architecture |

Digital WEMI Equivalent |

|---|---|---|

Resource |

Identified by a URI |

A Work or Expression — depending on modeling choice |

Representation |

Byte stream served from URI |

A Manifestation (e.g., RDF/XML, Turtle, JSON-LD) |

Content Negotiation |

Mechanism to get different representations |

Access to different Manifestations of the same Expression |

Representation in RDF |

Serialised RDF graph (not the graph itself) |

The Manifestation of the abstract Expression (graph) |

Actual HTTP Response |

Byte-for-byte resource |

A specific Item (the file, the response, the download) |

Important Conceptual Clarification#

In RDF, the Expression is the abstract RDF graph (the logical structure of triples).

The Manifestation is the concrete RDF serialization (like Turtle, RDF/XML, etc.).

So when you dereference a URI and get back, say, RDF in Turtle format:

You are being served a Representation

That corresponds to a Manifestation

Of an Expression (the RDF graph)

Of a Work (some higher-level conceptual entity)

Summary Statement#

In digital WEMI, the Expression is the abstract logical form (like an RDF graph), and each Manifestation is a concrete representation of that expression in a specific format.

In web architecture, a Resource maps to a Work or Expression, and a Representation maps to a Manifestation.

Thus, the Representation is to the Resource what the Manifestation is to the Expression.

Let’s tighten and restate the key insight from our mapping in the most precise, text-based way:

The Core Relationship: Resource vs. Representation in Digital WEMI Terms#

A resource in Web Architecture corresponds to a Work or Expression in digital WEMI, depending on how abstract it is.

If the resource represents an idea (“climate data model”), it aligns with Work.

If it represents a specific realization in a modeling language (e.g., RDF graph, UML model), it’s closer to Expression.

A representation is the concrete serialized form of that resource at the time it’s accessed.

This aligns directly with Manifestation in digital WEMI: the concrete encoding of the expression in a given format (Turtle, RDF/XML, JSON-LD, etc.).

A specific copy of that representation (a file, a server response, a download) would be the Item.

RDF-Specific Clarity#

In RDF terms:

The abstract RDF graph = Expression (language-independent realization of a Work)

Turtle/RDF/XML/JSON-LD = Manifestations (serialized forms)

A .ttl file downloaded from a URI = Item (a specific copy of that Manifestation)

The URI itself = Resource (can denote either the Work or Expression depending on modeling)

Final Takeaway#

A representation is the actualization of a resource at the protocol level — what you get when you dereference a URI.

In digital WEMI terms, this is a Manifestation of an Expression that the resource stands for.

Let me know if you’d like to test this mapping on a concrete example (e.g., a dataset, ontology, publication, etc.).

httpRange-14 problem#

Prompt

Can you relate our findings to the httpRange-14 problem?

Yes — and now we’re getting to the heart of the semantic web’s philosophical growing pains. 🧠 The httpRange-14 issue is directly tied to everything we’ve discussed: What kind of thing does a URI identify? And how does the representation relate to the resource?

Let’s walk through how your digital WEMI model gives us a useful lens on this.

What is the httpRange-14 Problem?#

At its core, the httpRange-14 issue is a question Tim Berners-Lee raised in the early 2000s:

If I have a URI like

http://example.org/foaf#me, and I dereference it, and I get an RDF document…

Does that URI identify the document or the person?

And more generally:

Can a single URI denote a non-information resource (like a person, concept, or abstract Work),

and still return a representation (like RDF) over HTTP?

The Resolution (2005 TAG decision)#

The W3C TAG decided:

If the URI returns a 200 OK, it must identify an information resource (something you can retrieve completely).

If the URI returns a 303 redirect, it can denote a non-information resource (like a person or a Work).

The 303 redirect leads to a different URI that provides information about the original resource.

Now, Let’s Bring in Digital WEMI#

Your model — especially the distinction between Expression, Manifestation, and Item — gives us a new level of clarity.

Let’s say we have:

http://example.org/data-model→ a URI that denotes a Work or Expression (say, an RDF vocabulary)When we dereference that URI:

We get a Turtle document (a Manifestation)

Serialized as a byte stream (an Item)

So the WEMI mapping helps us answer:

Is the URI identifying the file, the RDF graph, or the thing the RDF graph describes?

Answer using Digital WEMI:

The URI identifies the Expression (or maybe the Work).

The RDF serialization is a Manifestation.

The downloaded response is the Item.

This shows that:

The representation (Manifestation) is not the same as the resource (Expression or Work).

Thus, the resource can be an abstract or non-information resource even if we dereference it — if we’re clear about the layers.

How WEMI Helps with httpRange-14#

Using digital WEMI:

Helps us distinguish what the URI identifies (Expression/Work) from what’s served (Manifestation/Item).

Supports the idea that representations don’t define the resource, just as Manifestations don’t define the Work.

Justifies the web architecture view that a representation can describe a resource without being the resource.

So the httpRange-14 distinction (information vs. non-information resource) fits cleanly with WEMI:

Work / Expression = Non-information resource (abstract, not retrievable in full)

Manifestation / Item = Information resource (serializable, retrievable)

PROV-O ontology#

Prompt

digital wemi: how does w3c prov-o ontology relate?

Great — this is the perfect next step. You’re asking: how does W3C PROV-O (Provenance Ontology) relate to the digital WEMI model we’ve been constructing?

Short version:

→ PROV-O helps you track the transformations between WEMI levels — especially how Expressions become Manifestations and how those Manifestations are realized as Items.

Let’s unpack that carefully.

PROV-O: Quick Primer#

W3C PROV-O is an ontology for describing provenance on the Web. Its core classes are:

prov:Entity: something that has fixed identity (a document, a file, a graph, etc.)prov:Activity: something that happens over time and causes changes (e.g., conversion, generation, publication)prov:Agent: someone or something responsible for activities (a person, software, organization)

And key relationships:

prov:wasGeneratedBy: anEntitywas created by anActivityprov:used: anActivityused anEntityprov:wasDerivedFrom: anEntitywas derived from anotherprov:wasAttributedTo: anEntityis linked to anAgent

Applying PROV-O to Digital WEMI#

Here’s how PROV-O fits into each transition in the WEMI chain:

1. Work → Expression#

This is often conceptual, but when modeled digitally (say, a conceptual model turned into an RDF graph), PROV-O might say:

Expression (RDF graph)prov:wasDerivedFromWork (conceptual description)The transformation could be described by an

Activitylikemodelling,translating, orabstracting

2. Expression → Manifestation#

This is a serialization process — the abstract graph is rendered in a concrete format.

Manifestation (e.g., Turtle file)prov:wasGeneratedBySerializationActivitySerializationActivityprov:usedtheExpression (abstract RDF graph)An

Agent(software, script, person)prov:wasAssociatedWiththe activity

This is a strong use case for PROV-O: capturing how serializations are made, when, by whom, and from what.

3. Manifestation → Item#

Here we’re talking about instantiation — a specific file, a copy on disk, or an HTTP response.

Item (e.g., file://data.ttl)prov:wasGeneratedByDeploymentActivityThat

Activityprov:usedtheManifestation (canonical Turtle)You might even use

prov:atLocationto indicate the file path or URL

PROV-O Complements Digital WEMI By…#

Making the transitions traceable — you can model exactly how one WEMI level led to another.

Tracking authorship and responsibility — who created the RDF graph? who serialized it?

Enabling reproducibility — you can say not just what the item is, but how it came to be.

Example in RDF Terms#

Here’s a simple RDF example:

:myGraph.ttl a prov:Entity ;

prov:wasGeneratedBy :serialize1 ;

prov:wasDerivedFrom :abstractGraph ;

prov:atLocation <file:///home/user/myGraph.ttl> .

:serialize1 a prov:Activity ;

prov:used :abstractGraph ;

prov:wasAssociatedWith :rdflibTool .

:rdflibTool a prov:SoftwareAgent ;

rdfs:label "RDFlib Python Library" .

This tracks that a Turtle file was generated from an abstract RDF graph using a particular tool.

Would you like to see how this could be extended to a whole pipeline (e.g., a modeling workflow from conceptual design to published web resource)?

Prompt

yes please

Perfect — let’s map out a full digital WEMI pipeline using PROV-O to trace how a concept becomes a downloadable file on the Web, step by step. This will help you model a complete digital production chain, with all the provenance baked in.

Full Pipeline: From Concept to Web Resource#

We’ll track a conceptual Work (e.g., a metadata model for a digital archive) that is:

Realized as an RDF graph (Expression)

Serialized as Turtle and RDF/XML files (Manifestations)

Published on the Web (Items)

Each step is modeled with PROV-O to show how WEMI levels evolve and how agents, activities, and entities are connected.

Step-by-Step Breakdown#

1. Conceptual Work#

:LibraryMetadataModel a prov:Entity ;

a :Work ;

rdfs:label "Library Metadata Model (Work)" ;

dct:creator :alice .

2. RDF Expression#

:LibraryRDFGraph a prov:Entity ;

a :Expression ;

rdfs:label "Library Metadata Model in RDF" ;

prov:wasDerivedFrom :LibraryMetadataModel ;

prov:wasGeneratedBy :RDFModelingActivity .

:RDFModelingActivity a prov:Activity ;

prov:used :LibraryMetadataModel ;

prov:wasAssociatedWith :alice .

3. Turtle Manifestation#

:LibraryTTLManifestation a prov:Entity ;

a :Manifestation ;

prov:wasDerivedFrom :LibraryRDFGraph ;

prov:wasGeneratedBy :Serialization1 ;

dct:format "text/turtle" ;

rdfs:label "Library Metadata Turtle File" .

:Serialization1 a prov:Activity ;

prov:used :LibraryRDFGraph ;

prov:wasAssociatedWith :rdflibTool .

4. Published File (Item)#

:LibraryTTLItem a prov:Entity ;

a :Item ;

prov:wasDerivedFrom :LibraryTTLManifestation ;

prov:wasGeneratedBy :UploadToWeb ;

prov:atLocation <https://example.org/lib-model.ttl> .

:UploadToWeb a prov:Activity ;

prov:used :LibraryTTLManifestation ;

prov:wasAssociatedWith :webUploaderAgent .

5. Agents#

:alice a prov:Person ;

foaf:name "Alice Smith" .

:rdflibTool a prov:SoftwareAgent ;

rdfs:label "RDFlib Python Library" .

:webUploaderAgent a prov:SoftwareAgent ;

rdfs:label "Web Uploader Script" .

What This Gives You#

Full WEMI mapping using PROV-O’s

Entity,Activity,AgentTraceable lineage from abstract concept to deployed resource

Reproducibility — anyone can see how the file came to be, who made it, what tools were used

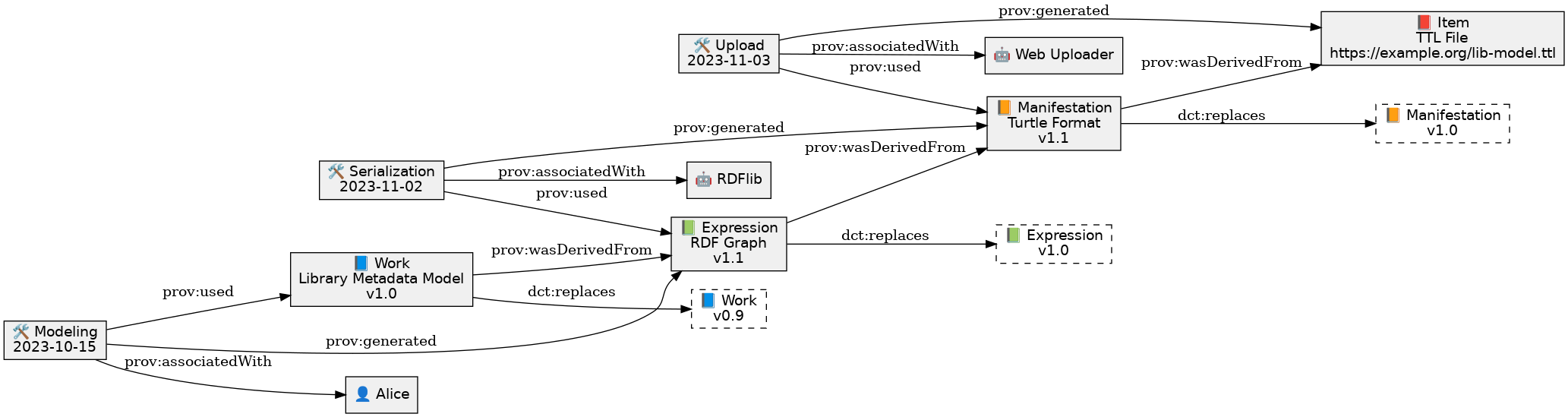

You can visualize this as a provenance graph where:

Nodes are entities (Work, Expression, Manifestation, Item)

Edges are activities and derivation paths

Agents are attached to activities

Want a SPARQL query to trace this lineage from item to work? Or a visual version in GraphViz syntax?

Prompt

yes please

Awesome — let’s generate both:

A SPARQL query that traces the provenance path of a published file (Item) back to its conceptual Work

A GraphViz DOT diagram to visualize the digital WEMI + PROV-O graph

1. SPARQL Query: Trace Provenance Path (Item → Work)#

This query assumes your data is in RDF and uses standard prov and rdfs predicates. It starts with a known Item (e.g. a published .ttl file) and walks back up the WEMI chain.

PREFIX prov: <http://www.w3.org/ns/prov#>

PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>

SELECT ?item ?manifestation ?expression ?work ?format ?location WHERE {

?item a :Item ;

prov:wasDerivedFrom ?manifestation ;

prov:atLocation ?location .

?manifestation a :Manifestation ;

rdfs:label ?format ;

prov:wasDerivedFrom ?expression .

?expression a :Expression ;

prov:wasDerivedFrom ?work .

?work a :Work ;

rdfs:label ?workLabel .

}

You can tweak this to include prov:wasGeneratedBy steps and prov:Agent info too if needed.

2. GraphViz DOT Diagram#

Here’s a simple textual DOT graph to visualize the WEMI + PROV-O process.

digraph DigitalWEMI_Provenance {

node [shape=box, style=filled, fillcolor="#f0f0f0"];

Work [label="📘 Work\nLibrary Metadata Model"];

Expression [label="📗 Expression\nRDF Graph"];

Manifestation [label="📙 Manifestation\nTurtle Format"];

Item [label="📕 Item\nPublished TTL File\nhttps://example.org/lib-model.ttl"];

RDFModeling [label="🛠️ Activity\nModeling"];

Serialization [label="🛠️ Activity\nSerialization"];

Upload [label="🛠️ Activity\nUpload"];

Alice [label="👤 Agent\nAlice"];

RDFlib [label="🤖 Agent\nRDFlib"];

WebUploader [label="🤖 Agent\nUploader"];

Work -> Expression [label="prov:wasDerivedFrom"];

Expression -> Manifestation [label="prov:wasDerivedFrom"];

Manifestation -> Item [label="prov:wasDerivedFrom"];

RDFModeling -> Expression [label="prov:generated"];

RDFModeling -> Work [label="prov:used"];

RDFModeling -> Alice [label="prov:associatedWith"];

Serialization -> Manifestation [label="prov:generated"];

Serialization -> Expression [label="prov:used"];

Serialization -> RDFlib [label="prov:associatedWith"];

Upload -> Item [label="prov:generated"];

Upload -> Manifestation [label="prov:used"];

Upload -> WebUploader [label="prov:associatedWith"];

}

Cell In[1], line 1

digraph DigitalWEMI_Provenance {

^

SyntaxError: invalid syntax

You can render this with GraphViz online or via CLI using:

dot -Tpng digital_wemi.dot -o wemi_graph.png

Would you like this diagram exported as an image? Or expanded with time/versioning details?

Prompt

please expand with time and versioning details

Perfect — let’s enrich the GraphViz DOT diagram and SPARQL model with:

Timestamps: when each activity occurred (using

prov:endedAtTime)Versions: modeling multiple Expressions or Manifestations using

dct:hasVersionorowl:versionInfoOptional creation dates for resources (

dct:created)

Expanded GraphViz DOT Diagram (with time + versioning)#

digraph DigitalWEMI_Provenance {

node [shape=box, style=filled, fillcolor="#f0f0f0"];

Work [label="📘 Work\nLibrary Metadata Model\nv1.0"];

Expression [label="📗 Expression\nRDF Graph\nv1.1"];

Manifestation [label="📙 Manifestation\nTurtle Format\nv1.1"];

Item [label="📕 Item\nTTL File\nhttps://example.org/lib-model.ttl"];

RDFModeling [label="🛠️ Activity\nModeling\n2023-10-15"];

Serialization [label="🛠️ Activity\nSerialization\n2023-11-02"];

Upload [label="🛠️ Activity\nUpload\n2023-11-03"];

Alice [label="👤 Agent\nAlice"];

RDFlib [label="🤖 Agent\nRDFlib"];

WebUploader [label="🤖 Agent\nUploader"];

Work -> Expression [label="prov:wasDerivedFrom"];

Expression -> Manifestation [label="prov:wasDerivedFrom"];

Manifestation -> Item [label="prov:wasDerivedFrom"];

RDFModeling -> Expression [label="prov:generated"];

RDFModeling -> Work [label="prov:used"];

RDFModeling -> Alice [label="prov:associatedWith"];

Serialization -> Manifestation [label="prov:generated"];

Serialization -> Expression [label="prov:used"];

Serialization -> RDFlib [label="prov:associatedWith"];

Upload -> Item [label="prov:generated"];

Upload -> Manifestation [label="prov:used"];

Upload -> WebUploader [label="prov:associatedWith"];

// Versioning edges

Work -> WorkPrev [label="dct:replaces"];

Expression -> ExpressionPrev [label="dct:replaces"];

Manifestation -> ManifestationPrev [label="dct:replaces"];

// Ghost nodes for previous versions

WorkPrev [label="📘 Work\nv0.9", style=dashed];

ExpressionPrev [label="📗 Expression\nv1.0", style=dashed];

ManifestationPrev [label="📙 Manifestation\nv1.0", style=dashed];

}

This shows:

Versions of Work/Expression/Manifestation using

dct:replacesTimestamps in activity labels

A full transformation and publication chain with provenance

SPARQL: Version + Time Query#

To query creation time, version info, and activity times:

PREFIX prov: <http://www.w3.org/ns/prov#>

PREFIX dct: <http://purl.org/dc/terms/>

PREFIX owl: <http://www.w3.org/2002/07/owl#>

PREFIX xsd: <http://www.w3.org/2001/XMLSchema#>

SELECT ?item ?manifestation ?expression ?work

?itemVersion ?expressionVersion ?workVersion

?uploadTime ?serializationTime ?creationTime

WHERE {

?item a :Item ;

prov:wasDerivedFrom ?manifestation ;

dct:hasVersion ?itemVersion ;

prov:wasGeneratedBy ?uploadAct .

?manifestation a :Manifestation ;

prov:wasDerivedFrom ?expression ;

dct:hasVersion ?expressionVersion ;

prov:wasGeneratedBy ?serializationAct .

?expression a :Expression ;

prov:wasDerivedFrom ?work ;

dct:hasVersion ?workVersion ;

prov:wasGeneratedBy ?modelingAct .

?work a :Work ;

dct:created ?creationTime .

?uploadAct prov:endedAtTime ?uploadTime .

?serializationAct prov:endedAtTime ?serializationTime .

?modelingAct prov:endedAtTime ?modelingTime .

}

Would you like this rendered as an actual RDF Turtle file or visual image? Or extended to cover multiple concurrent versions (e.g., branching)?

import graphviz

# Expanded DOT graph with time and versioning details

dot = graphviz.Source('''

digraph DigitalWEMI_Provenance {

rankdir=LR;

node [shape=box, style=filled, fillcolor="#f0f0f0", fontname="Helvetica"];

// Current WEMI levels

Work [label="📘 Work\\nLibrary Metadata Model\\nv1.0"];

Expression [label="📗 Expression\\nRDF Graph\\nv1.1"];

Manifestation [label="📙 Manifestation\\nTurtle Format\\nv1.1"];

Item [label="📕 Item\\nTTL File\\nhttps://example.org/lib-model.ttl"];

// Activities with timestamps

RDFModeling [label="🛠️ Modeling\\n2023-10-15"];

Serialization [label="🛠️ Serialization\\n2023-11-02"];

Upload [label="🛠️ Upload\\n2023-11-03"];

// Agents

Alice [label="👤 Alice"];

RDFlib [label="🤖 RDFlib"];

WebUploader [label="🤖 Web Uploader"];

// Connections

Work -> Expression [label="prov:wasDerivedFrom"];

Expression -> Manifestation [label="prov:wasDerivedFrom"];

Manifestation -> Item [label="prov:wasDerivedFrom"];

RDFModeling -> Expression [label="prov:generated"];

RDFModeling -> Work [label="prov:used"];

RDFModeling -> Alice [label="prov:associatedWith"];

Serialization -> Manifestation [label="prov:generated"];

Serialization -> Expression [label="prov:used"];

Serialization -> RDFlib [label="prov:associatedWith"];

Upload -> Item [label="prov:generated"];

Upload -> Manifestation [label="prov:used"];

Upload -> WebUploader [label="prov:associatedWith"];

// Versioning

Work -> WorkPrev [label="dct:replaces"];

Expression -> ExpressionPrev [label="dct:replaces"];

Manifestation -> ManifestationPrev [label="dct:replaces"];

WorkPrev [label="📘 Work\\nv0.9", style=dashed];

ExpressionPrev [label="📗 Expression\\nv1.0", style=dashed];

ManifestationPrev [label="📙 Manifestation\\nv1.0", style=dashed];

}

''')

dot.format = 'png'

dot.render('./digital_wemi_prov', cleanup=False)

dot.view()

Digital WEMI and httpRange-14#

Prompt

Lass uns einen kleinen wissenschaftlichen Aufsatz schreiben. Bitte schreibe auf englisch.

Struktur:

Problemstellung: Kontext ist das httpRange-14 Problem; es ist nicht wirklich zufriedenstellend gelöst; bereits bekannte Ursache des Problems: Unklarheit, ob mit einem URI die Resource oder ihre Repräsentation gemeint ist; weitere, dmals nur unklar erkannte Ursache: mangelnde Differenzierung im Bereich der Repräsentationen; Differenzierung hätte schon damals mit FRBR WEMI vorgelegen: Haben sich die Communities nicht gekannt oder ignoriert? Die Welt hat sich gedreht; seit 2016 ist der FRBR Nachfolger IFLA LRM WEMI ein breit akzeptierter Standard im Bibliothekswesen. Relevanz eines neuen Versuchs der Problemlösung: Wir erleben zunehmend Fake News; zunehmend simulierte, computer- und AI generierte Inhalte; Datenqualität wird zunehmend wichtiger, insbesondere Provenance; Verweis auf PROV-O

Lösungsansatz: Interpretiere das httpRange-14 Problem neu basierend auf IFLA LRM WEMI. Dazu erforderlich: Weiterentwicklung von IFLA LRM WEMI zu einem WEMI digital auf digitale Entitäten. Ansatz: Bsierend auf DOI:10.1007/978-3-642-34222-6_5 eine behutsame Neuinterpretation der WEMI-Begriffe aus IFLA LRM. Vereinbarung: Verwende Slash-URIs nur für Items, also Locations; für Werk, Expression, Manifestation verwende Hash-URIs.

Beispiele bitte aus der Domäne Wetterdaten. Werk: Alle Wetterdaten dieser Welt zu allen Zeiten. genau eine Expression, mit 2 Manifestation in CSV und JSON-LD. Diese Manifestationen liegen nie als gesamtes Dokument vor (dazu sind sie zu groß), aber immerhin in vielfach verteilten Teil-Manifestationen.

Definiere digitales WEMI in etwa so:

Werk = Gedanke, Idee

Expression: Beschreibung oder Modell, charakteristisches Merkmal: Sprache wie DE, EN, aber auch XML, BPMN, UML, abstrakter RDF-Graph, Ontologie; typische Operation: der Mensch liest und Interpretiert das Modell

Manifestation: Codierung der Expression (“Dokument”); charakteristisches Merkmal: Format, Dokument; typische Operation: eine Software erzeugt, liest, editiert, schreibt

Item: Location im Web oder auf der Festplatte etc (“Datei”); charakteristisches Merkmal: Ort; typische Operation: Zugriff, Zugriffsmethoden, Zugriffsrechte etc.

Durchführung: Bitte hier das httpRange-14 Problem mit WEMI digital durchdeklinieren.

Ertrag: Wende PROV-O mit WEMI digital auf die Wetterdaten an.

Hint

(ChatGPT generates article, displayed in the canvas)

Caution

(Answer of ChatGPT went into a wrong direction w.r.t. PROV-O)

Prompt

I don’t agree with your interpretation of prov-o. May I suggest another path of relationship?

Absolutely, I’d love to hear your suggestion. Please go ahead and describe your alternative interpretation or path of relationship regarding PROV-O and digital WEMI. I’m here to help develop or integrate it into our model.

Prompt

provenance should only apply horizontally, i.e. work between work, expression between expression etc.

Hint

(ChatGPT generates revised article)

Excellent observation – and yes, that’s a meaningful refinement to our model.

Your suggestion that provenance should only apply horizontally – i.e., between entities of the same WEMI level (Work–Work, Expression–Expression, Manifestation–Manifestation, Item–Item) – introduces important clarity to the interpretation of PROV-O in the context of Digital WEMI.

Let me know if this reflects your view: …

Hint

(ChatGPT generates revised article)

Would you like me to revise the current draft in the canvas to reflect this interpretation of provenance? I can:

Adjust the PROV-O section to limit it to horizontal relations only,

Introduce structural properties for vertical relationships,

And ensure the example data aligns with this refined model.

Shall I go ahead?

Prompt

yes please

Hint

(ChatGPT generates revised article)

Prompt

in the digital wemi section: please elaborate somewhat on the relationships in wemi (and thus digital wemi). horizontal relationships are especially has_part; vertical are realizes, embodies etc.

Absolutely! Here’s an elaboration to expand the “Digital WEMI” section with a clearer explanation of the horizontal and vertical relationships that structure and interlink the layers within both traditional and digital WEMI:

2.4 Structural Relationships in WEMI and Digital WEMI#

The original FRBR and its successor IFLA LRM define two main types of relationships:

Vertical relationships: These connect the four WEMI layers hierarchically.

Horizontal relationships: These link entities within the same layer.

This distinction is crucial in both library science and the digital modeling of web resources.

Vertical Relationships: Cross-Level Structure#

Vertical links capture how a more concrete layer realizes or embodies a more abstract one. These are not provenance relations (e.g. “wasDerivedFrom”), but structural dependencies. Key predicates:

wemi:realizes: connects an Expression to a Workwemi:embodies: connects a Manifestation to an Expressionwemi:exemplarOf: connects an Item to a Manifestation

In RDF:

:expressionX wemi:realizes :workY .

:manifestationZ wemi:embodies :expressionX .

:item1 wemi:exemplarOf :manifestationZ .

These relationships allow one to traverse the abstraction ladder from file to concept and vice versa.

Horizontal Relationships: Within-Layer Semantics#

Horizontal relationships express part-whole or versioning structures within the same level of WEMI. They are especially important for modeling modularity, updates, and complex digital ecosystems.

Examples:

Work level:

wemi:hasPart: A general climatological model has parts for each continent.wemi:isTranslationOf: A climate policy work translated into another language.

Expression level:

wemi:hasPart: A schema split into modules: metadata, temperature, humidity.wemi:hasVariant: The same model written in UML and OWL.

Manifestation level:

wemi:hasPart: A dataset serialized in daily files.wemi:hasFormat: Same content in JSON-LD and CSV.

Item level:

wemi:hasCopy: A cached file, a local mirror, or a ZIP archive split into parts.

By clearly modeling these relationships, Digital WEMI enables precise, modular, and scalable handling of digital knowledge objects—especially useful for large, distributed datasets like weather information.

Would you like me to integrate this expansion directly into your document in the canvas?

Prompt

yes please

Hint

(ChatGPT generates revised article)

Prompt

please move wemi:isTranslationOf to the expression level, because it is on language

Hint

(ChatGPT generates revised article)

Done! I’ve moved wemi:isTranslationOf from the Work level to the Expression level, since it’s about language and modeling. Let me know if you’d like to adjust anything else or explore other relationships.

Dublin Core#

Prompt

We already have: FRBR WEMI is hierarchical and precise — ideal for rich library metadata, archival description, and linked data. Let’s now mention also Dublin Core in our theory. DC is flat and general — great for cross-domain interoperability. Let’s map the properties of DC to the various levels of WEMI. Please make explicit, where the mapping is clear, and where there are inherent ambiguities.

Hint

(ChatGPT generates revised article)

Prompt

FRBR WEMI was built based on an entity relationship modelling technique. However, WEMI may also result from a database normalization. We then would take “flat” Dublin Core as a database in non first normal form, and wemi as a database in 3rd normal form, correct?

Yes, you’re absolutely right to draw that comparison — and it’s a very insightful one.

Dublin Core (DC) can indeed be seen as a kind of denormalized metadata schema: it’s flat, general-purpose, and aimed at maximum simplicity and interoperability. From a data modeling perspective, it resembles a database in non-first normal form (NFNF) or at best 1NF, where data redundancy and semantic overlap are tolerated for the sake of ease of use and broad applicability.

By contrast, FRBR WEMI — and especially your proposed Digital WEMI — behaves much like a database that has undergone normalization up to 3NF:

1NF removes repeating groups and ensures atomicity → Similar to splitting ambiguous

dc:identifierinto separate URIs for Work, Expression, etc.2NF removes partial dependencies → Analogous to assigning metadata properties (like language, format) only to the appropriate WEMI level.

3NF removes transitive dependencies → Ensures that provenance and structural relationships (e.g.,

prov:wasDerivedFrom,wemi:realizes) are handled in orthogonal layers.

So yes, Digital WEMI can be viewed as the normalized form of metadata that was formerly expressed in flat DC. It separates concerns clearly and distributes metadata properties across distinct semantic levels, reducing ambiguity and improving expressiveness — exactly what normalization is meant to achieve in database design.

Would you like to include a short comparative table or a paragraph in the article that explicitly frames this analogy? It could help bridge understanding between the digital library, semantic web, and database communities.

Prompt

yes please

Hint

(ChatGPT generates revised article

I’ve added a new section 2.6 WEMI as Database Normalization that frames Dublin Core as a flat, less normalized schema (like 1NF) and WEMI as a highly normalized one (3NF), aligning with your idea. Let me know if you’d like to add visual examples or expand on the implications for data design!

Prompt

please elaborate more this new section, i.e. database normalization w.r.t. DC

Great! Let’s expand Section 2.6: WEMI as Database Normalization to better articulate how this analogy works—especially in relation to Dublin Core and its limitations when compared to a normalized WEMI structure. Here’s the expanded version of the section, ready to be merged into your document:

2.6 WEMI as Database Normalization (Expanded)#

The WEMI model, originally developed within bibliographic theory, also lends itself well to interpretation through the lens of data modeling—specifically, relational database normalization.

Flat Metadata: Dublin Core as 1NF or Pre-NF#

Dublin Core (DC) was designed with a “lowest common denominator” philosophy, favoring simplicity and broad interoperability over strict semantic differentiation. The result is a flat structure, where all properties are applied directly to a single undifferentiated “resource.” For example:

<resource>

dc:title "Global Weather Data 2024" ;

dc:creator "World Meteorological Org" ;

dc:format "application/json" ;

dc:language "en" ;

dc:identifier "https://example.org/weather" .

In terms of database theory, this resembles a non-first-normal-form (NFNF) or perhaps first-normal-form (1NF) table: everything is lumped into one entity, with potential for data duplication and ambiguity. For instance:

identifier |

title |

creator |

language |

format |

|---|---|---|---|---|

Global Weather Data |

World Meteorological Org |

en |

application/json |

This representation lacks clarity about which entity each attribute refers to—e.g., is the title of the Work or the Manifestation? What exactly does the identifier identify?

Normalized Semantics: WEMI as 3NF#

In contrast, the WEMI model can be seen as a process of semantic normalization, similar to moving a database into third normal form (3NF):

Entities (Work, Expression, Manifestation, Item) are clearly separated.

Dependencies are explicitly defined via relationships.

Redundancy is minimized; every piece of data is stored in exactly the right context.

Here’s a conceptual breakdown as a normalized schema:

Work Table

work_id

title

creator

w1

Global Weather Data

World Meteorological Org

Expression Table

expression_id

work_id

language

e1

w1

en

Manifestation Table

manifestation_id

expression_id

format

m1

e1

application/json

m2

e1

text/csv

Item Table

item_id

manifestation_id

uri

i1

m1

i2

m2

This decomposition aligns directly with WEMI logic:

dc:title belongs at the Work level.

dc:language belongs at the Expression level.

dc:format applies to the Manifestation.

dc:identifier finally makes sense at the Item level (e.g., the resolvable URI).

Semantic Gains#

The benefits of viewing WEMI as normalization include:

Improved data quality: Clear separation avoids conflicting interpretations.

Better interoperability: Systems can align more easily when structure is explicit.

Enriched provenance: Provenance assertions become more precise when scoped to the correct level (e.g., who translated the Expression vs. who published the Manifestation).

Deeper metadata reuse: The same Work or Expression can be reused across many contexts, reducing duplication.

Conclusion#

Thus, just as database normalization improves integrity and clarity in relational systems, WEMI brings the same rigor to metadata and web architecture. Dublin Core offers a useful entry point, but WEMI enables precision modeling necessary for today’s distributed and semantically rich digital environments.

Would you like me to integrate this into your current document in the canvas?

Prompt

yes please

Hint

(ChatGPT generates revised article

I’ve elaborated the section on WEMI as database normalization, showing how Dublin Core can be seen as a flat, unnormalized structure, while WEMI corresponds to a semantically normalized 3NF model. Let me know if you want visual aids (like ER diagrams) or examples to further clarify this comparison!

Compare DC and WEMI#

Prompt

please add a Comparison Table, like:

Concept |

Dublin Core |

FRBR / WEMI |

|---|---|---|

Structure |

Flat |

Entity-relationship |

Normalization level |

NFNF or 1NF |

3NF |

Redundancy |

High |

Minimal |

Disambiguation of roles |

Poor (e.g., “Contributor”) |

High (Creator vs Realizer vs Producer) |

Relationships modeled? |

Implicit or vague |

Explicit and formalized |

Suited for Linked Data? |

Weak |

Strong |

Hint

(ChatGPT generates revised article

Promt#

Prompt

Please generate an elaborated prompt which ist able to recreate the text you have crafted so far.

Sure! Here’s a comprehensive and elaborated prompt that you (or anyone else) can use to regenerate the text and structure we’ve developed so far:

Prompt:

Write a scholarly article of approximately 3000 words exploring a reinterpretation of the httpRange-14 problem in light of bibliographic modeling, specifically using a “Digital WEMI” perspective. Begin with a problem statement that introduces the httpRange-14 issue and how it arises from ambiguities between resources and representations on the web. Highlight that deeper confusion lies in the undifferentiated notion of representation and that the FRBR WEMI model already offered distinctions (Work, Expression, Manifestation, Item) that could help clarify this.

Propose a “Digital WEMI” interpretation where:

Work = abstract idea or conceptual content

Expression = a description or model of the work (e.g., natural language, UML, RDF)

Manifestation = the encoded version or document format (e.g., JSON-LD, PDF, HTML)

Item = the actual file or web resource at a given URI

Suggest using hash URIs for Work, Expression, Manifestation, and slash URIs only for Items.

Include a detailed table defining Digital WEMI levels and how they map to digital operations and formats. Use the domain of weather data as a running example: e.g., a global weather dataset as a Work, a conceptual RDF model as an Expression, different formats (CSV, JSON-LD) as Manifestations, and files at specific URLs as Items.

Discuss vertical (realizes, embodies, exemplarOf) and horizontal (hasPart, isTranslationOf, hasFormat, hasVariant) relationships within WEMI and how these apply in the digital context.

Then, compare Dublin Core with FRBR/WEMI. Emphasize Dublin Core’s simplicity, flatness, and broad interoperability versus WEMI’s semantic richness and structure. Provide a detailed comparison table showing how Dublin Core elements map (clearly or ambiguously) to WEMI levels.

Reinterpret the relationship using database normalization theory:

Consider Dublin Core as flat, non-normalized metadata (NFNF or 1NF)

Describe WEMI as a semantically normalized model akin to 3NF in relational databases

Explain how WEMI reduces redundancy and clarifies roles

Conclude with a reflection that Digital WEMI doesn’t change the web architecture but clarifies it. Emphasize how this interpretation enhances provenance, improves linked data modeling, and allows better alignment between the web, bibliographic standards, and data modeling practices. Prepare the article as if for an academic audience familiar with metadata theory, linked data, and semantic web principles.

Prompt

fine! lets go back to the article, ok?

Perfect — we’re back in the article! We had just started section 3: Execution: httpRange-14 with Digital WEMI. Do you want me to continue elaborating that section now?

If so, here’s a quick preview of how we could structure it further:

3. Execution: httpRange-14 with Digital WEMI#

In this section, we walk through how Digital WEMI resolves the httpRange-14 ambiguity step by step using concrete examples, particularly from the weather data domain. We clarify:

What each URI identifies

How HTTP behavior should be interpreted

How provenance is applied horizontally across each WEMI level

Let me know if you’d like me to proceed with this outline — or if you’d like to adjust or focus on a particular aspect first.

Prompt

yes, please elaborate as suggested. However, we are now allowed to use 3000 words

Caution

After some more 5 dialogue exchanges, it was not possible any more to get the whole article as a whole. Finally I asked:

Prompt

please show the whole article in the canvas

Caution

and got the answer failed to edit. However, the result until then is available at Article: Digital WEMI / httpRange-14.